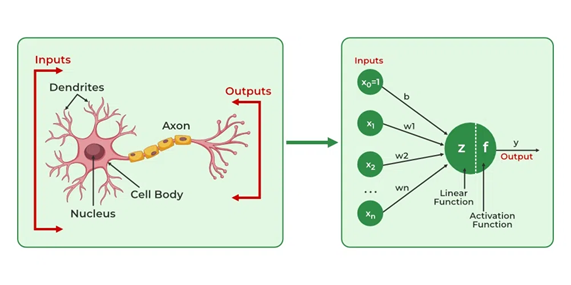

Artificial Neural Networks (ANNs) are a class of machine learning models loosely inspired by the structure and function of the human brain. They are composed of interconnected nodes, or neurons, each of which transforms the signals it receives and passes the result on. ANNs have driven major advances in fields ranging from image and speech recognition to natural language processing and autonomous vehicles.

How Do Artificial Neural Networks Work?

The operation of ANNs is based on the following key components and processes:

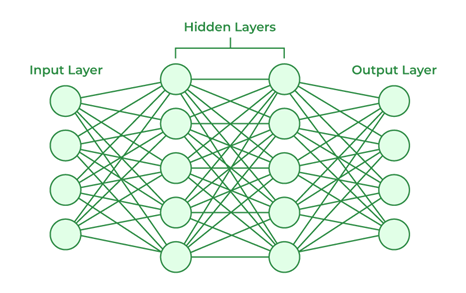

1. Structure of ANNs:

- Input Layer: This is the first layer that receives input data. The number of neurons in this layer corresponds to the number of features in the input dataset.

- Hidden Layers: These intermediate layers process the input data. ANNs can have one or more hidden layers, and each neuron in these layers applies a transformation to the inputs it receives.

- Output Layer: The final layer produces the output of the network, which can be a prediction or classification result.

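
As a rough illustration of how layer sizes determine a network's shape, the Python sketch below assumes fully connected (dense) layers, where every neuron in one layer connects to every neuron in the next. The first entry of the size list plays the role of the input layer (one neuron per feature) and the last entry the output layer. The function names `layer_shapes` and `count_parameters` and the example sizes are hypothetical.

```python
def layer_shapes(layer_sizes):
    """Return (weight_shape, bias_length) for each pair of adjacent layers.

    layer_sizes[0] is the input layer (one neuron per input feature), the last
    entry is the output layer, and everything in between is a hidden layer.
    Assumes fully connected layers.
    """
    return [((n_out, n_in), n_out)
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]


def count_parameters(layer_sizes):
    """Total number of trainable weights and biases in a fully connected network."""
    return sum(rows * cols + n_bias
               for (rows, cols), n_bias in layer_shapes(layer_sizes))


# Hypothetical example: 4 input features, one hidden layer of 8 neurons, 3 outputs.
sizes = [4, 8, 3]
print(layer_shapes(sizes))      # [((8, 4), 8), ((3, 8), 3)]
print(count_parameters(sizes))  # 67
```

Each weight matrix has one row per neuron in the receiving layer and one column per neuron in the sending layer, which is why adding hidden neurons grows the parameter count quickly.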

2. Neurons and Activation Functions: Each neuron computes a weighted sum of its inputs (each connection, loosely analogous to a synapse, carries its own weight), adds a bias term, and passes the result through an activation function to produce its output. The activation function introduces non-linearity into the model; without it, a stack of layers would collapse into a single linear transformation and could not capture complex patterns in data. Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and tanh.
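
The computation of a single neuron can be sketched in a few lines of Python. This is a minimal illustration, not how any particular library implements it; the `neuron` helper and the input, weight, and bias values are hypothetical. The sigmoid is written in a numerically stable form so that `math.exp` does not overflow for large negative inputs.

```python
import math


# The three activation functions named above.
def sigmoid(z):
    # Split on the sign of z so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    return max(0.0, z)


def tanh(z):
    return math.tanh(z)


def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus a bias, then a non-linearity."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical inputs, weights, and bias.
x = [1.0, 2.0]
w = [0.5, -0.25]
for f in (sigmoid, relu, tanh):
    print(f.__name__, neuron(x, w, bias=0.1, activation=f))
```

Swapping the `activation` argument is all it takes to compare the three functions on the same pre-activation value.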

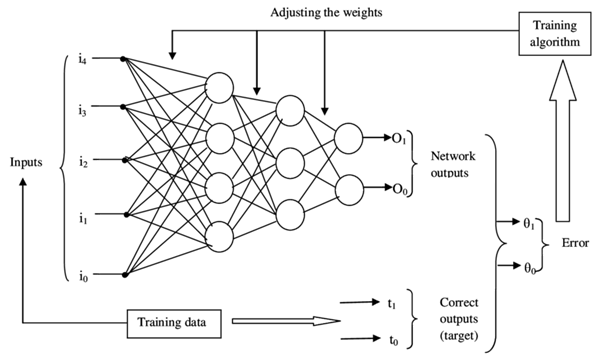

3. Learning Process: ANNs learn through a process called training, in which they repeatedly adjust their weights (on the connections between neurons) and biases (one per neuron) to minimize the error between their predictions and the actual target values.

- Forward Propagation: During this phase, input data is passed through the network layer by layer until it reaches the output layer. Each neuron's output is computed from its inputs, weights, and bias, followed by its activation function.

- Loss Calculation: The network’s output is compared to the actual target values using a loss function, which quantifies the error in predictions.

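- Backpropagation: This is the key learning algorithm. Starting from the loss, it propagates the error backward through the network, using the chain rule to compute how much each weight and bias contributed to the error (the gradient of the loss with respect to that parameter). An optimization technique such as gradient descent then uses these gradients to update the weights in the direction that reduces the loss.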

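
The three steps above can be sketched end to end with a tiny network in plain Python. This is an illustrative sketch under several assumptions the text does not make: a single tanh hidden layer, one sigmoid output neuron, binary cross-entropy as the loss function, full-batch gradient descent with a fixed learning rate, and the XOR problem as toy data. The class `TinyNet`, its methods, and all numeric settings are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyNet:
    """inputs -> tanh hidden layer -> one sigmoid output neuron."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # W1[j][i] is the weight from input i to hidden neuron j.
        self.W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Forward propagation: returns hidden activations and the output probability."""
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        p = sigmoid(sum(w * hj for w, hj in zip(self.W2, h)) + self.b2)
        return h, p

    def loss(self, data):
        """Loss calculation: mean binary cross-entropy over (x, y) pairs."""
        total = 0.0
        for x, y in data:
            _, p = self.forward(x)
            p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total / len(data)

    def gradients(self, data):
        """Backpropagation: chain rule from the loss back to every parameter."""
        n = len(data)
        gW1 = [[0.0] * len(row) for row in self.W1]
        gb1 = [0.0] * len(self.b1)
        gW2 = [0.0] * len(self.W2)
        gb2 = 0.0
        for x, y in data:
            h, p = self.forward(x)
            d_out = (p - y) / n  # dLoss/dz at the output for sigmoid + cross-entropy
            gb2 += d_out
            for j, hj in enumerate(h):
                gW2[j] += d_out * hj
                # Error propagated back to hidden neuron j; tanh'(a) = 1 - tanh(a)^2.
                d_h = d_out * self.W2[j] * (1.0 - hj * hj)
                gb1[j] += d_h
                for i, xi in enumerate(x):
                    gW1[j][i] += d_h * xi
        return gW1, gb1, gW2, gb2

    def step(self, data, lr):
        """One full-batch gradient descent update: move each parameter against its gradient."""
        gW1, gb1, gW2, gb2 = self.gradients(data)
        for j in range(len(self.W1)):
            for i in range(len(self.W1[j])):
                self.W1[j][i] -= lr * gW1[j][i]
            self.b1[j] -= lr * gb1[j]
            self.W2[j] -= lr * gW2[j]
        self.b2 -= lr * gb2


# Hypothetical toy task: XOR, which a network without a hidden layer cannot solve.
xor = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
net = TinyNet(n_in=2, n_hidden=4, seed=1)
initial_loss = net.loss(xor)
for _ in range(2000):
    net.step(xor, lr=0.5)
final_loss = net.loss(xor)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

Deep learning frameworks compute these gradients automatically (automatic differentiation), but writing the chain rule out by hand shows what backpropagation actually produces. Hand-written gradients like these can be checked by comparing them with finite-difference estimates of the loss.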

Types of Artificial Neural Networks

There are several types of ANNs, each suited for different tasks:

1. Feedforward Neural Networks (FNN): Information flows in one direction from input to output without cycles. Commonly used for classification and regression tasks.

2. Convolutional Neural Networks (CNN): Primarily used for image processing tasks, CNNs utilize convolutional layers to automatically detect features in images, making them ideal for tasks like image recognition and segmentation.

3. Recurrent Neural Networks (RNN): RNNs contain recurrent connections (loops) that feed a hidden state from one time step into the next, allowing information to persist. This makes them suitable for sequential data processing such as time series analysis and natural language processing.

4. Long Short-Term Memory Networks (LSTM): A special type of RNN designed to retain information over many time steps. Their gated memory cells mitigate the vanishing-gradient problem that limits plain RNNs, making LSTMs effective for tasks involving long sequences, such as speech recognition and language modeling.

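5. Generative Adversarial Networks (GAN): GANs pair two networks, a generator that produces synthetic samples and a discriminator that tries to distinguish them from real data, and train them against each other. The trained generator can then create new data samples that resemble the training data, with applications in image generation and style transfer.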
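
To make two of these ideas concrete, the sketch below shows the core operation of a CNN (a small kernel slid across the input) and of an RNN (a hidden state carried from one time step to the next), in one dimension and with scalar weights for readability. Both functions, `conv1d` and `rnn_forward`, are hypothetical simplifications; real layers use many channels, learned kernels, and vector-valued hidden states. As in most deep learning libraries, the "convolution" here is technically cross-correlation (the kernel is not flipped).

```python
import math


def conv1d(signal, kernel):
    """'Valid' 1-D convolution (cross-correlation), the core of a CNN layer.

    The same small kernel is applied at every position, so one set of weights
    detects a feature wherever it appears in the input.
    """
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar Elman-style RNN: the recurrent weight w_h carries the hidden
    state h from each time step into the next."""
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A [-1, 1] kernel acts as an edge detector.
edges = conv1d([0, 0, 1, 1, 1, 0], kernel=[-1, 1])
print(edges)  # [0, 1, 0, 0, -1]: +1 where the signal rises, -1 where it falls

with_memory = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
without_memory = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.0, b=0.0)
print(with_memory)     # later states stay non-zero after the input drops to 0
print(without_memory)  # later states are 0.0
```

Setting `w_h=0.0` removes the recurrent connection, and the hidden state falls to zero as soon as the input does; the non-zero later states in `with_memory` are the information that the loop lets persist.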

Applications of ANNs

ANNs have numerous applications across various industries:

- Healthcare: ANNs are used for medical image analysis, drug discovery, and disease diagnosis.

- Finance: ANNs are employed for credit scoring, fraud detection, algorithmic trading, and risk management.

- Natural Language Processing: They are employed in language translation, sentiment analysis, and text generation.

- Autonomous Vehicles: ANNs enable self-driving cars to perceive their surroundings, make decisions, and control their movement.

- Image and Speech Recognition: ANNs power technologies such as facial recognition systems and virtual assistants that understand spoken commands.

- Recommendation Systems: ANNs drive personalized recommendations on platforms like Netflix and Amazon by analyzing user behavior and preferences.

Challenges in ANNs

While ANNs have achieved significant success, they still face certain challenges:

- Data Hunger: ANNs require large amounts of data to train effectively.

- Black-Box Nature: ANNs are often considered “black boxes” as their decision-making processes are difficult to interpret.

- Computational Cost: Training large ANNs can be computationally expensive and time-consuming.

- Overfitting and Underfitting: ANNs are susceptible to overfitting, where they memorize the training data (including its noise) and generalize poorly to new data, and to underfitting, where they fail to capture the underlying patterns at all.

Way Forward

To address these challenges, researchers and engineers are exploring the following approaches:

- Transfer Learning: Reusing a model pre-trained on a large dataset as the starting point for a new task, which reduces the data and computation needed to train the new model.

- Explainable AI: Developing techniques to interpret and explain the decisions made by ANNs.

- Hardware Acceleration: Utilizing specialized hardware like GPUs and TPUs to accelerate training and inference.

- Regularization Techniques: Applying techniques such as dropout and L1/L2 regularization to reduce overfitting.
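
Two of the regularization techniques above can be sketched as follows. This is a minimal plain-Python illustration, assuming a flat list of weights and a single layer's activations; the function names and all numbers are hypothetical. The dropout shown is the common "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time.

```python
import random


def l1_penalty(weights, lam):
    """L1 term added to the training loss: lam * sum(|w|); encourages sparse weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 term added to the training loss: lam * sum(w^2); penalizes large weights."""
    return lam * sum(w * w for w in weights)


def dropout(activations, p, training, rng):
    """Inverted dropout on one layer's activations.

    During training each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so the expected activation is
    unchanged. At inference time the activations pass through untouched.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


weights = [0.5, -1.5, 2.0]
data_loss = 0.30  # hypothetical unregularized loss value
total_loss = data_loss + l2_penalty(weights, lam=0.01)
print(total_loss)

rng = random.Random(0)
hidden = [0.2, 0.7, 1.1, 0.4]
print(dropout(hidden, p=0.5, training=True, rng=rng))   # each entry is 0.0 or doubled
print(dropout(hidden, p=0.5, training=False, rng=rng))  # unchanged
```

Because the penalty is added to the loss, its gradient (for L2, `2 * lam * w` per weight) pulls every weight toward zero at each gradient descent step, which is why L2 regularization is also known as weight decay.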

Conclusion

Artificial Neural Networks have emerged as a powerful tool with the potential to transform various industries. By addressing the challenges and exploring innovative techniques, we can unlock the full potential of ANNs and shape a future where intelligent machines work alongside humans.
